Faisal

Bengaluru, Bangalore Division, Karnataka, India

Experience: 2 years

Open to: Full-Time

Education: Bachelor's

Availability: Within 30 Days

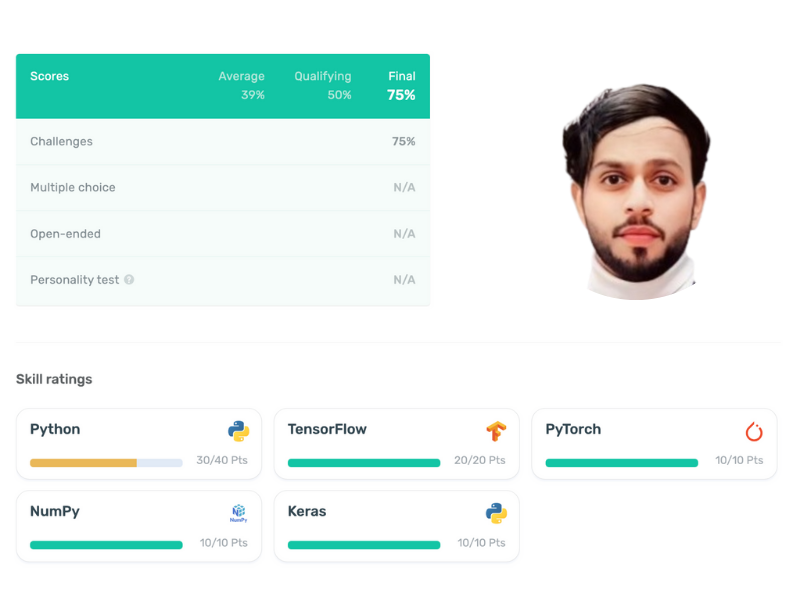

Skills: AWS, Docker, GCP, Jira, Jupyter Notebooks, Kafka, Kubernetes, LLMs/ChatGPT, Matplotlib, MySQL, pandas, Python, Python3, PyTorch, scikit-learn, Selenium, TensorFlow

Previously worked at: Fortune 500

Assessment Score: 75

- Educational Background

Holds a B.Tech in Electronics and Communication Engineering from I G Engineering College, Sagar (2019-2023), with a strong foundation in AI and machine learning techniques applied across various projects.

- Professional Experience

Over 2 years of experience, including a 10-month internship and a current position as a Data Scientist at Globussoft Technologies, Bangalore. Experience includes developing and deploying machine learning models, implementing NLP and computer vision models, and working with advanced deep learning techniques for real-time applications.

- Key Skills and Expertise

Proficient in Python, SQL, machine learning, deep learning, natural language processing (NLP), and generative AI. Skilled in web scraping, OCR, Flask/FastAPI development, and using AWS for cloud-based solutions, with a focus on data mining and implementing AI-driven systems.

- AI/ML Projects and Achievements

Led the development of several impactful AI projects, including brand logo identification using transfer learning, sentiment analysis, object detection, and employee performance anomaly detection. Also contributed to projects involving face recognition, search-by-image models, and URL categorization for enhanced business insights.

- Research and Internships

Completed a research traineeship at DRDO-ADE, where multiple machine learning models for aerial target tracking were successfully implemented. Co-authored a technical paper presented at IEEE-IPTA 2023 in Paris. Also interned as a software engineer at Caravel Info Systems, contributing to face recognition model deployments.

- Technological Contributions and Tools

Expertise in developing and deploying scalable solutions using tools such as Elasticsearch, Kafka, Redis, and TensorFlow. Hands-on experience in creating advanced APIs for deployment and integration, automating data pipelines, and improving system performance using Prometheus and Grafana for observability.